By Dana Tofig, Communications Director, Institute of Education Sciences

In today’s increasingly global economy, there is a lot of interest in understanding how students in the United States (U.S.) are performing compared to their peers around the world. That is why the National Center for Education Statistics participates in and conducts several international assessments. One of those assessments—the Trends in International Mathematics and Science Study (TIMSS) Advanced—gives us a unique opportunity to see how our advanced students are performing in rigorous mathematics and physics classes as they complete high school. TIMSS Advanced is part of a broader data collection that also assesses the performance of 4th- and 8th-grade students in mathematics and science, the results of which are summarized in another blog entry.

TIMSS Advanced 2015 was administered in nine education systems to students in their final year of secondary school who had taken or were taking advanced mathematics or physics courses. In the U.S., TIMSS Advanced was given to over 5,500 Grade 12 students who were taking or had taken either advanced mathematics courses covering topics in geometry, algebra, and calculus, or a second-year physics course. The U.S. last participated in TIMSS Advanced in 1995.

What Percentage of Students Take Advanced Mathematics and Physics?

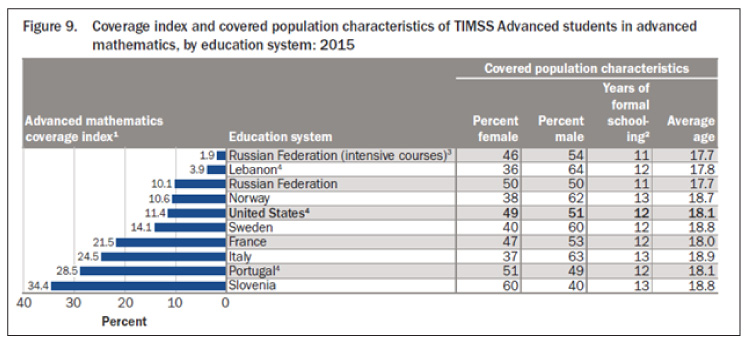

Among the nine education systems participating in TIMSS Advanced 2015, the percentage of the corresponding age cohort (18-year-olds in the U.S.) taking advanced mathematics varies widely. This percentage, which TIMSS calls the “coverage index,” ranges from a low of 1.9 percent to a high of 34.4 percent. The U.S. falls in the middle, with 11.4 percent of 18-year-olds taking advanced mathematics courses. The U.S. coverage index for advanced mathematics has nearly doubled since 1995, when it was 6.4 percent.

In the U.S. and two other participating systems (Portugal and the Russian Federation), students taking advanced mathematics were split fairly evenly between males and females. In the remaining systems, most students in the coverage index were male, except in Slovenia, where 60 percent were female. Interestingly, Slovenia also had the highest coverage index, at 34.4 percent.

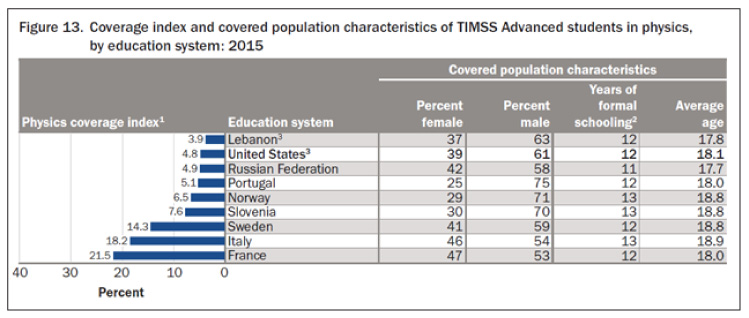

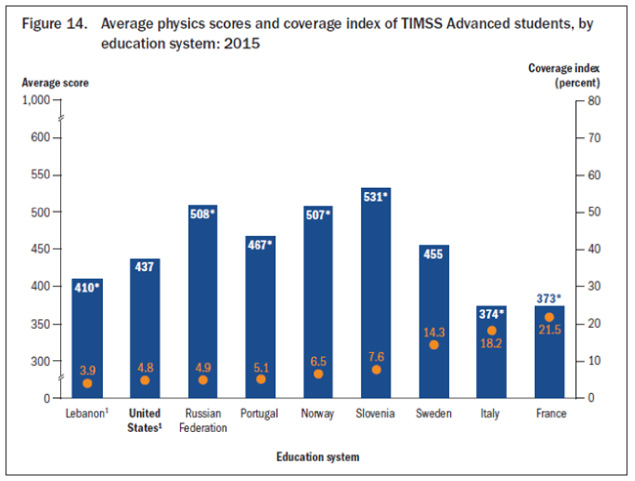

It’s a different story in science for the U.S. Only 4.8 percent of U.S. 18-year-olds took physics, among the lowest of the nine systems participating in TIMSS Advanced. Only Lebanon (3.9 percent) had a lower percentage, while France had the highest coverage index, at 21.5 percent. Males made up the majority of physics students in all nine participating systems, including the U.S.

How Did U.S. Students Perform in Advanced Mathematics?

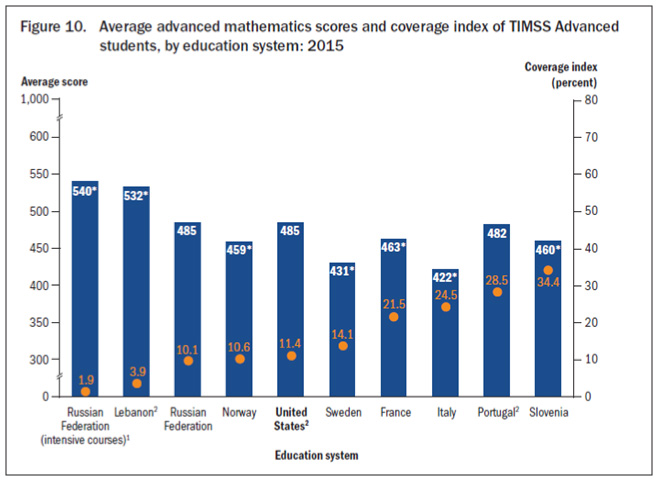

U.S. students scored 485 in advanced mathematics on TIMSS Advanced 2015, which is not significantly different from the average U.S. score in 1995. By contrast, on TIMSS 2015, which was given to a representative sample of fourth- and eighth-graders across the U.S., mathematics scores for both grades increased significantly from 1995 to 2015.

On TIMSS Advanced 2015 in advanced mathematics, two systems scored significantly higher than the U.S. (Lebanon and the Russian Federation students who took intensive courses[1]), while five systems scored significantly lower (Norway, Sweden, France, Italy, and Slovenia). The scores of the remaining two systems were not significantly different from the U.S. score.

How Did U.S. Students Perform in Physics?

U.S. students scored 437 in physics on TIMSS Advanced 2015, which was not significantly different from the U.S. score in 1995. No education system did better in physics in 2015 than in 1995, but several did worse: four of the six systems that participated in TIMSS Advanced in both 1995 and 2015 saw a significant drop in their scores.

Of the nine education systems participating in TIMSS Advanced 2015 in physics, four scored significantly higher than the U.S. (the Russian Federation, Portugal, Norway, and Slovenia), and three scored significantly lower (Lebanon, Italy, and France). Sweden’s physics score was not significantly different from that of the U.S.

A Note about Interpretation

It’s important to remember that there are differences in student characteristics and the structure of the various education systems that participated in TIMSS Advanced 2015. Those differences should be kept in mind when interpreting results.

[1] Intensive courses are advanced mathematics courses that involve 6 or more hours per week. Results for students in these courses are reported separately from those of other students in the Russian Federation, whose courses involve 4.5 hours per week.