Weighted student funding (WSF) is a funding method that aims to allocate funding based on individual student needs. While large districts are increasingly using WSF systems, little research exists to assess their effectiveness. In this guest blog, Dr. Marguerite Roza, Georgetown University, discusses her team’s ongoing IES-funded research study that seeks to document and understand WSF designs and features as implemented in the field, and to gauge the extent to which WSF designs are associated with reducing achievement gaps.  The study’s initial findings chart the WSF landscape across 19 U.S. school districts that used WSF in 2017-18.

Over the last two decades, dozens of big districts (including those in New York City, Boston, Denver, Houston, and Chicago) have shifted to using a weighted student formula to distribute some portion of their total budget. Instead of distributing resources via uniform staffing formulas, these districts use a student-based formula to allocate some fixed sum of dollars to schools for each student based on need (for example, allocations are typically higher for students with disabilities and students with limited English proficiency). The 2015 Every Student Succeeds Act (ESSA) authorized a WSF pilot, allowing up to 50 districts to incorporate key federal program dollars into a district’s formula.
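To make the mechanics concrete, here is a minimal sketch of how a student-based formula might turn enrollment into a school budget. It assumes a base per-pupil amount with additive percentage weights; the dollar figure, the weight values, and the function names are hypothetical and are not taken from any study district, each of which defines its own base and weights.

```python
# Hypothetical base amount and weights, for illustration only.
BASE_PER_PUPIL = 4000.0
WEIGHTS = {"ell": 0.30, "disability": 0.50, "poverty": 0.10}


def student_allocation(needs):
    """Dollars for one student: the base plus an additive weight per need."""
    return BASE_PER_PUPIL * (1.0 + sum(WEIGHTS[need] for need in needs))


def school_allocation(students):
    """Sum the per-student allocations for every student enrolled."""
    return sum(student_allocation(needs) for needs in students)


# Four students: no weighted needs, ELL, ELL plus poverty, and disability.
school = [[], ["ell"], ["ell", "poverty"], ["disability"]]
school_total = school_allocation(school)
print(f"School allocation: ${school_total:,.0f}")
```

Under these assumed numbers, the four students generate $4,000, $5,200, $5,600, and $6,000, so dollars follow the student rather than a staffing ratio.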

As WSF systems now serve millions of K–12 students, and the number of WSF districts continues to grow, our research begins to document the range of these WSF formulas and gather details about how they are being implemented in school systems around the nation.

Why do districts adopt WSF?

Our study of school board and budget documentation indicates that nearly all districts identify equity (89%) and flexibility for school principals (79%) as key rationales, with nearly half also citing a goal of transparency (49%). Interestingly, not one of the 19 districts cites “choice” (whereby families choose their school) as a driving factor in the rationale for using WSF, even though much of the literature links choice and WSF. Despite the goal of transparency, only a third of the districts actually post their formulas online (like this posting from Houston ISD), a finding that surprised us and them. In fact, after we shared the finding with our study districts, several updated their online budget materials to include their formulas. Whether districts are meeting their goals of equity and flexibility will be more fully investigated in Phase 2 of the project.

Is there a typical WSF model that districts are using?

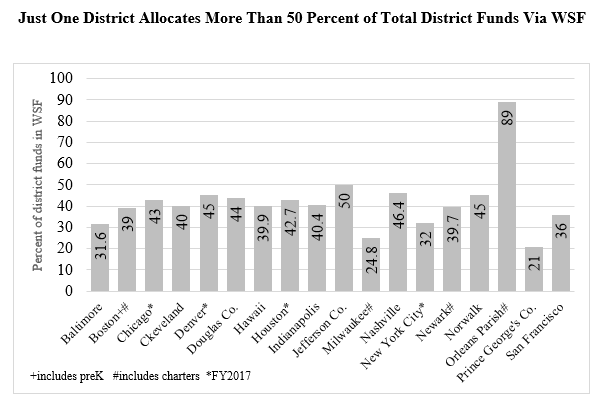

No. We find that there is no standard WSF: Each district has developed a home-grown formula and differences are substantial. On one end of the spectrum, Prince George’s County deploys only 20% of its total budget via its WSF, while Orleans Parish deploys 89%. Most districts deploy some 30-50% of their annual funds via their WSF formula, indicating that they are adopting a hybrid approach. They deploy the rest of their funds via staff allocations, program allocations, or in whatever ways they did before moving to WSF.

Districts define their “base” allocations differently, and no two districts use the same student weights. Most commonly, districts use grade level as a student weight category, but they do not agree on which level of schooling warrants the highest weight. Seven districts give their highest grade-level weight to elementary grades, four give it to middle school grades, and four give it to high school grades.

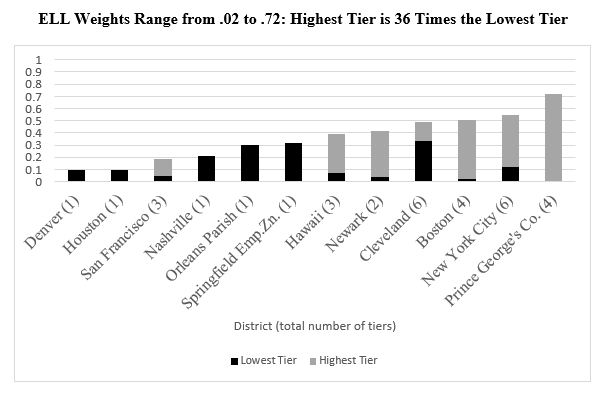

Two-thirds of districts use weights for students identified as English Language Learners (ELL) and for students with disabilities, while half use weights for poverty. Even the size of the weights differs, with ELL weights ranging from 10% to 70%. Several districts use tiered weights.

We also found a range of unique weights designed within the districts for categories of locally identified need (for example, Boston uses a weight for students with interrupted formal learning, and Houston uses a weight for students who are refugees).

What other trends exist in districts implementing WSF?

We found that non-formula features and exemptions reflect local context. Small school subsidies, magnet allocations, and foundation amounts are common examples of non-formula features that several districts use. Some districts exempt some schools from the formula, grant weights for school types (rather than student types), or fund selected staffing positions outside the formula. Districts seem to be layering their WSF formulas on top of long-standing allocations, like subsidies for small schools. Clearly, it is difficult for most districts to deploy a strict formula, and these exemptions or adjustments have the effect of mitigating the formula’s effects on some schools.

We also found that nearly all districts continue to use average salaries in their budgeting, likely limiting their goals for equity. In this practice, schools are charged for their teaching staff based on district-wide average salaries, not the actual salaries of teachers in the building. Districts in Boston and Denver have experimented with the use of real salaries for a subset of their schools (allowing for roughly one-third of their schools to budget and account for spending based on actual salaries). Both the formula exceptions and this continued reliance on average salaries may be limiting the extent to which WSF is making progress on equity. Analysis in Phase 2 of the project will quantify the effects of these formula adjustments on spending.
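A small worked example may help show why average-salary budgeting can mask spending differences. The sketch below assumes two hypothetical schools with three teachers each; all salary figures and variable names are invented for illustration and do not come from the study districts.

```python
# Hypothetical salaries: school A has newer teachers, school B has veterans.
school_a = [52000.0, 55000.0, 58000.0]
school_b = [82000.0, 85000.0, 88000.0]

all_salaries = school_a + school_b
district_average = sum(all_salaries) / len(all_salaries)

# Average-salary budgeting charges each school the same amount per teacher.
charged_a = district_average * len(school_a)
charged_b = district_average * len(school_b)

# Real-salary budgeting charges what the teachers in the building are paid.
actual_a = sum(school_a)
actual_b = sum(school_b)

print(f"District average salary: ${district_average:,.0f}")
print(f"School A charged ${charged_a:,.0f}, actual ${actual_a:,.0f}")
print(f"School B charged ${charged_b:,.0f}, actual ${actual_b:,.0f}")
```

In this made-up case both schools are charged the same amount on paper, yet the district actually spends $90,000 more on teacher salaries at school B. That gap is invisible in each school's budget, which is one way the practice can work against the equity goal of a formula.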

What kinds of budget flexibilities do principals have?

With WSF, districts give principals flexibility in staffing, stipends, and contracts, but not base compensation. In virtually all WSF districts, principals had at least some flexibility in choosing the number and type of staff in their buildings and in awarding stipends. Interestingly, most principals had power to issue contracts with their funds, and half could carry over funds from one year to the next. Despite these flexibilities, base teacher compensation is generally off limits for principals and continues to be controlled centrally.

How difficult is it for districts to design and implement their own versions of WSF?

Changing district allocations is hard work. At each point in our study, we find districts building “homegrown” approaches to WSF that reflect their own spending history and local context. We could see this as a practical transition of sorts between old and new allocation strategies, where district leaders straddle both the desires to change allocations and the pressures to keep allocations the way they are.

What are the next steps in this research?

Future analysis in this project will explore the degree to which WSF is delivering on the goal of increasing equity and outcomes for poor and at-risk students. However, the homegrown nature of WSF makes it tough to generalize about the WSF model or its effects. Undoubtedly, the variation poses problems for research. Clearly there’s no way to analyze WSF as a single model. Also challenging is that districts use different definitions (even on formula items such as the “base” and what constitutes a student weight). Perhaps this is unsurprising as there is no common training on the WSF model, and no prevailing terminology or budgeting procedures for district leaders to use in their work.

We see our study as a first step in a broader research agenda that will explore the scope and range of implementation of WSF in U.S. school districts and offer deeper analysis of the extent to which WSF is helping systems meet commonly cited goals of greater equity, flexibility, and transparency. In the meantime, we hope WSF systems and those considering shifting to WSF will be able to learn from this work and what peer systems are doing, perhaps with the ultimate effect of creating a common vocabulary for this financial model.